Blog

Keeping IPs alive without keepalived

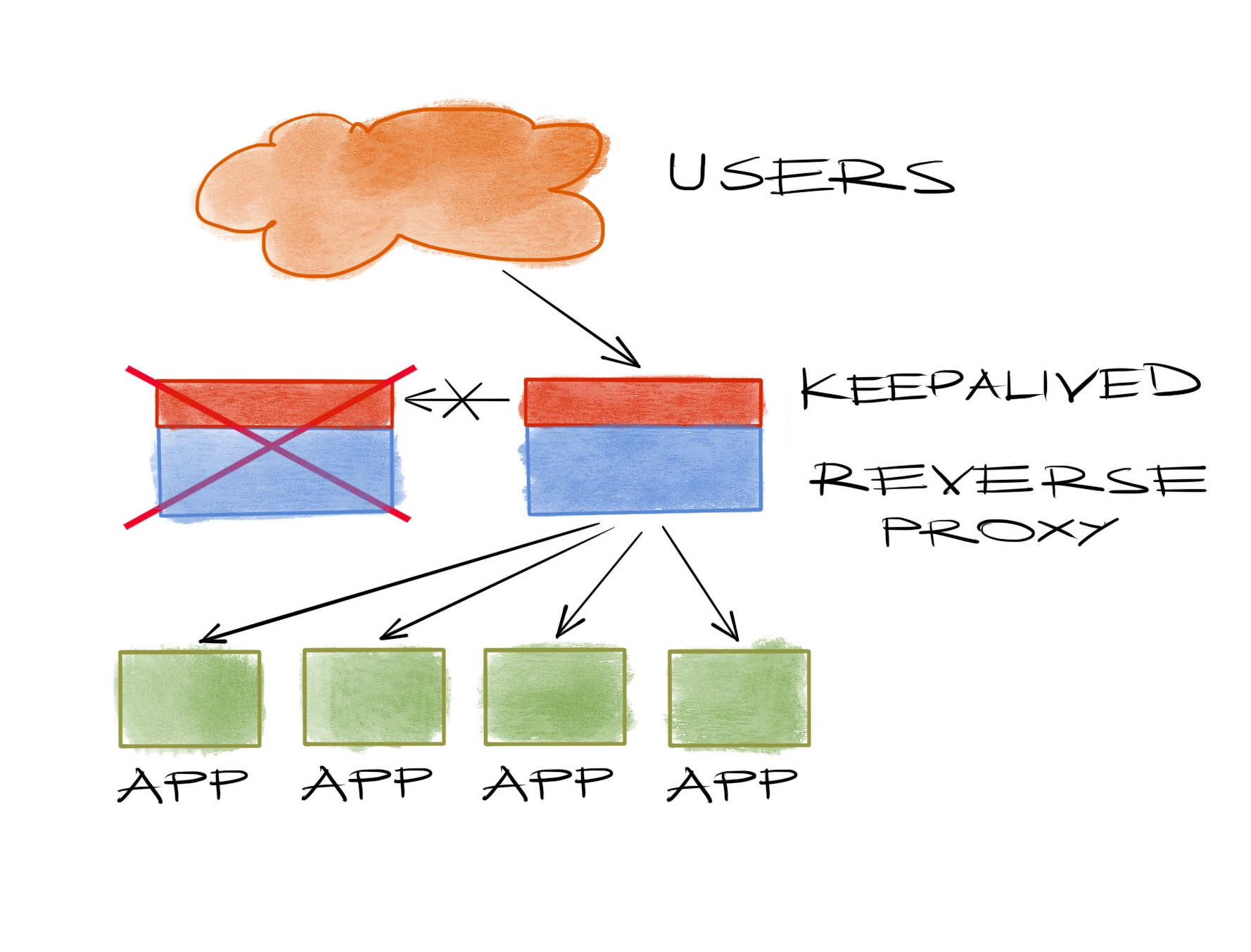

Most of us run some service as multiple instances of the same application, so that it stays available even when one of them goes down. When we do that, we usually deploy a reverse proxy (or a load balancer) to direct the service's users to the instances.

A journey through the write caches

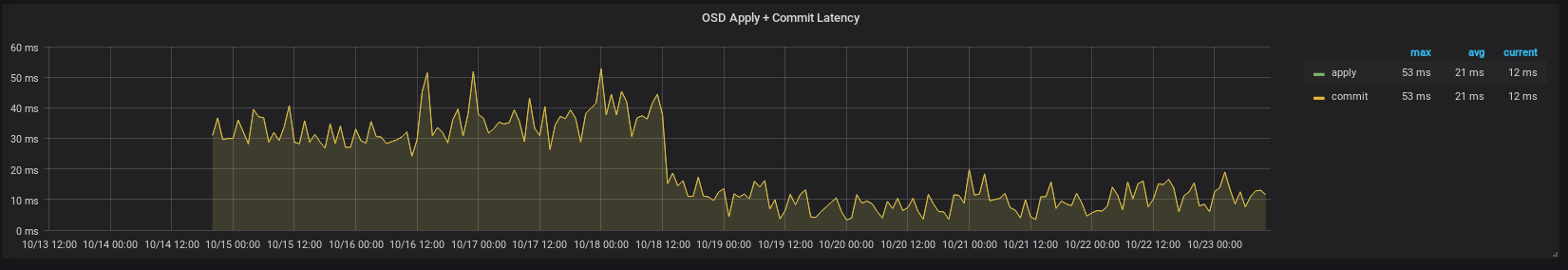

As is customary for any new hardware, we had to test our new drives in one of our latest deployments, especially how they behave with respect to write caching. This specific deployment had its RAID controllers set to JBOD mode, exposing all drives directly to the operating system, and we use those drives as bluestore ceph OSDs.

Heaviness of large ceph clusters

Data capacity is the first thing that comes to mind when talking about large ceph clusters, or data storage systems in general. The number of drives is another measure to think about. Sometimes maximum IOPS is also something to look out for, especially when considering an all-flash / NVMe cluster. But heaviness? What does that even mean?

Simple networking for bare-metal kubernetes

One of the most important, and also least understood, parts of kubernetes is cluster networking. I guess you have already read through it and are now a bit confused about how to implement the required model, considering you were presented with about 30 different options. A bit more googling narrows that number down to a few popular choices. Nevertheless, container networking is an involved subject, and it's easy to get lost in the details of any specific solution.